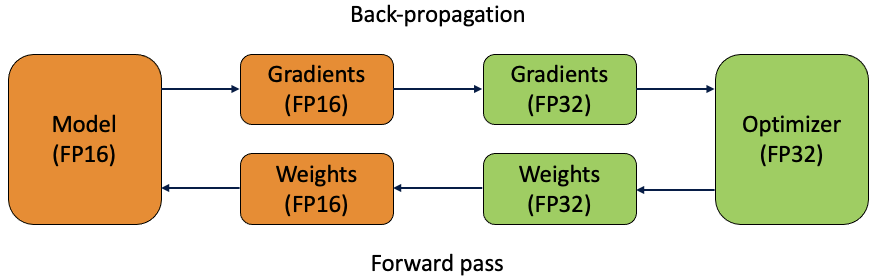

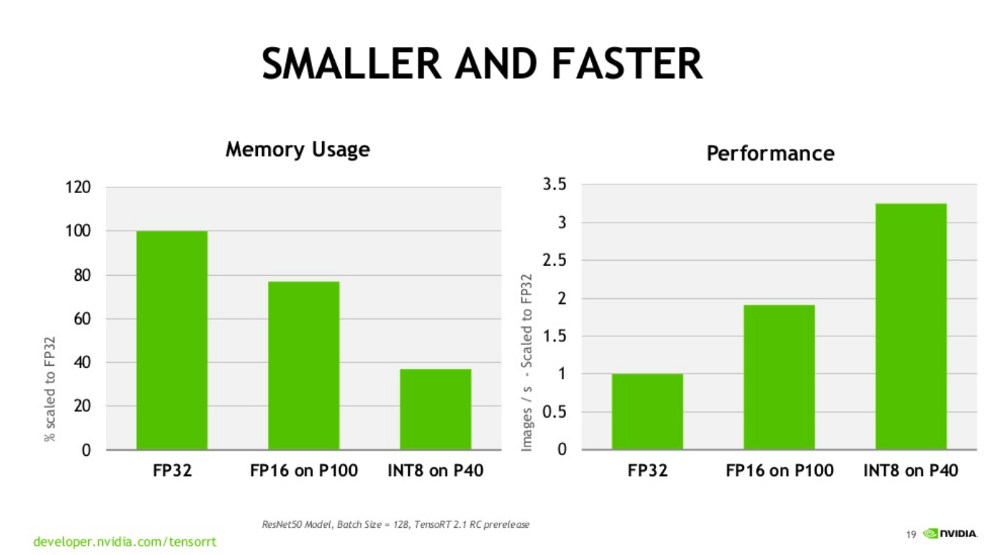

Revisiting Volta: How to Accelerate Deep Learning - The NVIDIA Titan V Deep Learning Deep Dive: It's All About The Tensor Cores

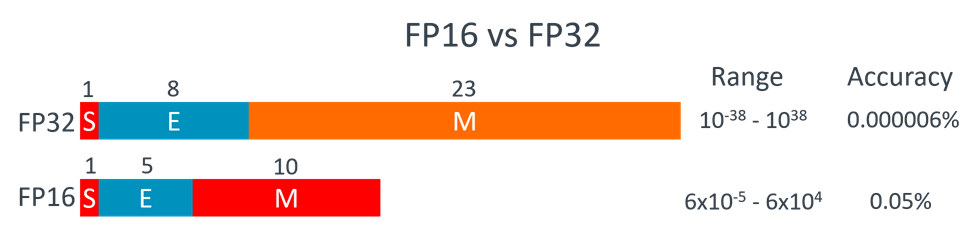

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else) - The NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation

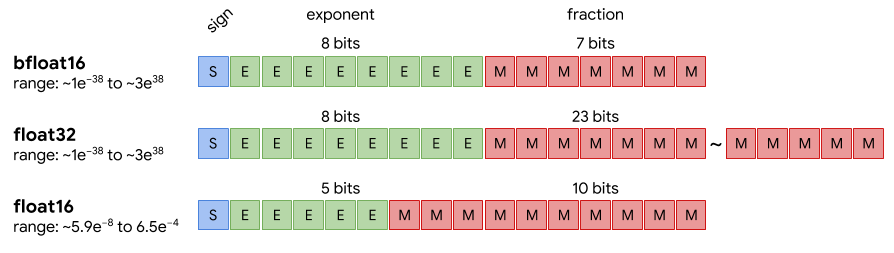

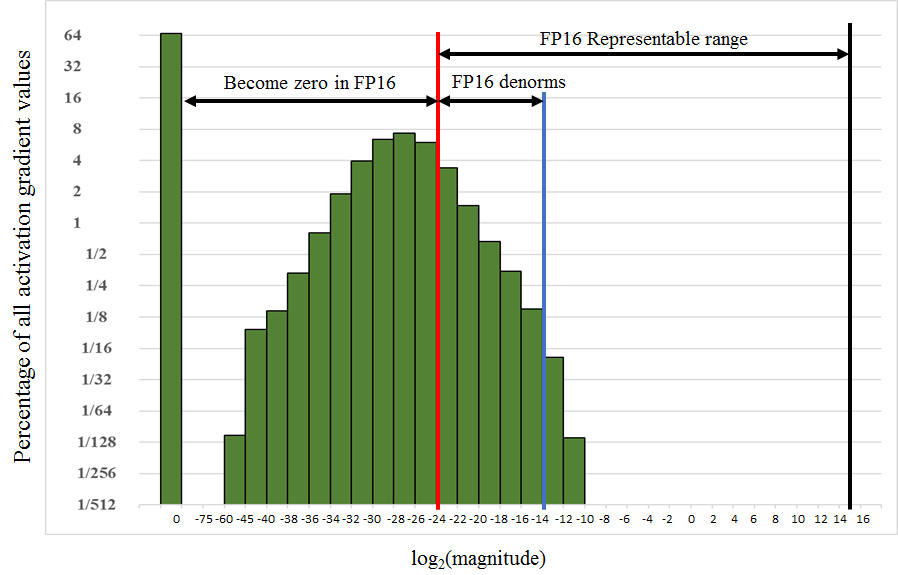

![\[RFC\]\[Relay\] FP32 -> FP16 Model Support - pre-RFC - Apache TVM Discuss](https://discuss.tvm.apache.org/uploads/default/original/2X/4/450bd810eb5b388a3dc4864b1bdd5f78cd01d2dc.png)